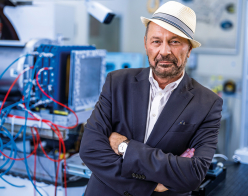

IBM’s head of science and technology urges early-career researchers to proactively involve industry in their research.

For Heike Riel, IBM fellow and head of science and technology at IBM Research, successful careers in science are built not by choosing between academia and industry, but by moving fluidly between them. With a background in semiconductor physics and a leadership role in one of the world’s top industrial research labs, Riel learnt to harness the skills she picked up in academia, and now uses them to build real-world applications. Today, IBM collaborates with academia and industry partners on projects ranging from quantum computing and cybersecurity to developing semiconductor chips for AI hardware.

“I chose semiconductor physics because I wanted to build devices, use electronics and understand photonics,” says Riel, who spent her academic years training to be an applied physicist. “There’s fundamental science to explore, but also something that can be used as a product to benefit society. That combination was very motivating.”

Hands-on mindset

For experimental physicists, this hands-on mindset is crucial. But experiments also require infrastructure that can be difficult to access in purely academic settings. “To do experiments, you need cleanrooms, fabrication tools and measurement systems,” explains Riel. “These resources are expensive and not always available in university labs.” During her first industry job at Hewlett-Packard in Palo Alto, Riel realised just how much she could achieve if given the right resources and support. “I felt like I was then the limit, not the lab,” she recalls.

This experience led Riel to proactively combine academic and industrial research in her PhD with IBM, where cutting-edge experiments are carried out towards a clear, purpose-driven goal within a structured research framework that still leaves plenty of room for creativity. “We explore scientific questions, but always with an application in mind,” says Riel. “Whether we’re improving a product or solving a practical problem, we aim to create knowledge and turn it into impact.”

Shifting gears

According to Riel, once you understand the foundations of fundamental physics, and feel as though you have learnt all the skills you can glean from it, then it’s time to consider shifting gears and expanding your skills with economics or business. In her role, understanding economic value and organisational dynamics is essential. But Riel advises against independently pursuing an MBA. “Studying economics or an MBA later is very doable,” she says. “In fact, your company might even financially support you. But going the other way – starting with economics and trying to pick up quantum physics later – is much harder.”

Riel sees university as a precious time to master complex subjects like quantum mechanics, relativity and statistical physics – topics that are difficult to revisit later in life. “It’s much easier to learn theoretical physics as a student than to go back to it later,” she says. “It builds something more important than just knowledge: it builds your tolerance for frustration, and your capacity for deep logical thinking. You become extremely analytical and much better at breaking down problems. That’s something every employer values.”

In demand

High-energy physicists are in high demand even in fields like consulting, says Riel. A high-achieving academic has a really good chance of being hired, as long as they present their job applications effectively. When scouring applications, recruiters look for specific keywords and transferable skills, so regardless of the depth or quality of your academic research, the way you present yourself really counts. Physics, Riel argues, teaches a kind of thinking that’s both analytical and resilient. Experimental physicists can tailor their applications towards hands-on experience and an understanding of tangible solutions to real-world problems. Theoretical physicists should demonstrate logical problem-solving and thinking outside the box. “The winning combination is having aspects of both,” says Riel.

On top of that, research in physics increases your “frustration tolerance”. Every physicist has faced failure at some point in their academic career, and their determination to persevere is what makes them resilient. Whether it comes from constantly thinking on your feet or from finding new solutions to the same problems, this resilience is what can make a physicist’s application stand out from the others. “In physics, you face problems every day that don’t have easy answers, and you learn how to deal with that,” explains Riel. “That mindset is incredibly useful, whether you’re solving a semiconductor design problem or managing a business unit.”

Riel champions the idea of the “T-shaped person”: someone with deep expertise in one area (the vertical stroke of the T) and broad knowledge across fields (the horizontal bar of the T). “You start by going deep – becoming the go-to person for something,” says Riel. This deep knowledge builds your credibility in your desired field: you become the expert. But after that, you need to broaden your scope and understanding.

That breadth can include moving between fields, working on interdisciplinary projects, or applying physics in new domains. “A T-shaped person brings something unique to every conversation,” adds Riel. “You’re able to connect dots that others might not even see, and that’s where a lot of innovation happens.”

Adding the bar on the T means that you can move fluidly between different fields, including through academia and industry. For this reason, Riel believes that the divide between academia and industry is less rigid than people assume, especially in large research organisations like IBM. “We sit in that middle ground,” she explains. “We publish papers. We work with universities on fundamental problems. But we also push toward real-world solutions, products and economic value.”

The difficult part is making the leap from academia to industry. “You need the confidence to make the decision, to choose between working in academia or industry,” says Riel. “At some point in your PhD, your first post-doc, or maybe even your second, you need to start applying your practical skills to industry.” Companies like IBM offer internships, PhDs, research opportunities and temporary contracts for physicists all the way from master’s students to high-level post-docs. These are ideal ways to get your foot in the door of a project, get work published, grow your network and garner some of those industry-focused practical skills, regardless of the stage you are at in your academic career. “You can learn from your colleagues about economy, business strategy and ethics on the job,” says Riel. “If your team can see you using your practical skills and engaging with the business, they will be eager to help you up-skill. This may mean supporting you through further study, whether it’s an online course, or later an MBA.”

Applied knowledge

Riel notes that academic research is often driven by curiosity and knowledge gain, while industrial research is shaped by application. “US funding is often tied to applications, and they are much stronger at converting research into tangible products, whereas in Europe there is still more of a divide between knowledge creation and the next step to turn this into products,” she says. “But personally, I find it most satisfying when I can apply what I learn to something meaningful.”

That applied focus is also cyclical, she says. “At IBM, projects to develop hardware often last five to seven years. Software development projects have a much faster turnaround. You start with an idea, you prove the concept, you innovate the path to solve the engineering challenges and eventually it becomes a product. And then you start again with something new.” This is different to most projects in academia, where a researcher contributes a small part of a very long-term project. Regardless of the timeline of the project, the skills gained from academia are invaluable.

For early-career researchers, especially those in high-energy physics, Riel’s message is reassuring: “Your analytical training is more useful than you think. Whether you stay in academia, move to industry, or float between both, your skills are always relevant. Keep learning and embracing new technologies.”

The key, she says, is to stay flexible, curious and grounded in your foundations. “Build your depth, then your breadth. Don’t be afraid of crossing boundaries. That’s where the most exciting work happens.”